Work is energy transfer. But what happens when work is done on an object such that its height above the ground doesn't change? The answer is that only kinetic energy is transferred into or out of the object. And since work is the total amount of energy transferred into or out of the object, it follows that the work is equal to the object's change in kinetic energy.

Genesis of the Elements

During the 20th century, scientists discovered the genesis of the elements in the periodic table. Einstein's theory of gravity precipitated a revolution and renascence period in cosmology; it transformed our picture of the large-scale universe. We learned that, contrary to the ideas which had prevailed for centuries since Newton's time, the universe is actually expanding and that in some distant epoch (the earliest moments of the young universe) all of the matter and energy in the universe must have been concentrated into a single, very small region of space no bigger than a grapefruit. The temperatures and pressures were so extreme in this early universe that hydrogen and helium could be formed. Later, the universe cooled and vast aggregates of atoms condensed into galaxies and stars. In the latter half of the 20th century, we learned that the heavier elements in the periodic table were created in the nuclear furnaces and death throes of the stars. We really are made of star stuff!

Snowball Earth

The Proterozoic Eon is a sweep of time beginning 2.5 billion years ago and ending 542 million years ago. During the first 500 million years, cyanobacteria and photosynthesis emerged, which oxygenated the world. The following one billion years was a time of enigmatic calm in which the Earth did not change much. But in the last roughly 350 million years, the Earth's systems were spun into a whirlwind. The Earth experienced one of the most dramatic series of ice ages in its history and turned into a giant, white ice ball. After these ice ages ended, a second great oxygenation event occurred. The last step was taken in the march from the simple to the complex: there was finally enough oxygen to support large, multi-cellular creatures and the Ediacaran fauna emerged.

Early Earth History

After ancient stars exploded, their remnants conglomerated through gravity to one day form a place called Earth. Primordial Earth was a giant, red ball of magma and smoldering rocks; it was hellish and ablaze with erupting volcanoes and fiery skies. But over time hails of comets and asteroids bombarded the Earth to form the oceans causing Earth's outer layer to cool and turn grey; those heavenly bodies also seeded the oceans with rich organic chemistry which, somehow, eventually turned into the first microbe. Nearly one billion years later, photosynthesis was invented—this oxygenated the world, a little, and was the first step in the march towards the emergence of large, complex, multi-cellular organisms.

Colonizing the Moon

In this article, we discuss Moon colonization: the best spots to build infrastructure on the Moon; the advantages of going there; how the Moon's resources could be utilized; and the prospect of an immense lunar city.

Colonizing the Asteroids and Comets of our Solar System

The extraordinary Carl Sagan long ago envisioned in his book, Pale Blue Dot, humanity eventually terraforming other worlds and building settlements on the asteroids and comets in our solar system. He imagined that these little worlds could perhaps be redirected and maneuvered (used as little rocky "space ships") in order to set sail for the stars. In this article, we discuss some of the techniques which could be used towards this telos.

Introduction to Einstein's Theory of General Relativity

General Relativity is hailed by many as one of the greatest achievements of human thought of all time. Einstein's theory of space, time, and gravity threw out the old Newtonian stage of a fixed Euclidean space with a universal march of time; the new stage on which events play out is spacetime, a bendable and dynamic fabric which tells matter how to move. This theory perhaps holds the key to unlocking H. G. Wells's time machine into the past; according to Kip Thorne, it will pave the way towards the next generation of ultra-powerful telescopes which rely on gravitational waves; and it also perhaps holds the key to breaking the cosmic speed limit and colonizing the Milky Way galaxy and beyond in a comparatively short period of time.

Superconductors: the Future of Transportation and Electric Transmission

Superconductors are the key to unlocking the future of transportation and electrical transmission. They enable the most efficient approaches to these industrial processes known to present science. A maglev vehicle, to borrow Jeremy Rifkin's wording, will shrink the dimensions of space and time by allowing distant continental and inter-continental regions to be reached in, well, not much time at all. But superconductors also offer unprecedented efficiency: they eliminate the problem of atoms colliding with other atoms, would allow vehicles to "slide" across enormous distances with virtually no loss of energy, and would allow a loop of current to persist longer than the remaining lifetime of the universe. Much of the damage accumulated in the components of vehicles can, in some way or another, be traced to friction against the road; maglev transportation circumvents this issue.

Thermodynamics and the "Arrow" of Time

The second law of thermodynamics specifies the arrow of time, the direction in which the flow of time runs. Before the discovery of the laws of thermodynamics, nothing in classical mechanics forbade time from running both ways. Just as a cup could fall on the floor and shatter into many pieces, it was also conceivable that a shattered cup could spontaneously reassemble and climb back on top of the table without violating the laws of energy and momentum conservation. It was not until the discovery of the second law of thermodynamics that the laws of physics finally declared that events can only happen in one direction, thereby giving time a sense of direction.

Exponential Growth of Information Technology

In this short article, we discuss how an exponential function, when viewed on a linear scale, starts off very gradually and then suddenly seems to "blow up." This character of exponential functions is the reason why the number of internet users seemed to blow up out of nowhere and why the cost and size of computer chips have diminished so rapidly in such a short period of time. But when viewed on a logarithmic scale, nothing appears to "blow up": behavior which looks rather wild on a linear scale looks inevitable on a logarithmic one. This allowed Ray Kurzweil to predict that the internet would one day have billions of users at a time when perhaps only a few thousand people had access to it.
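The contrast between the linear and logarithmic views can be sketched numerically. The starting value and the number of doublings below are hypothetical, chosen only for illustration; they are not real internet-usage data.

```python
import math

# Hypothetical illustration: a quantity that doubles every step (not real data).
initial_users = 1_000
doubling_steps = 20

users = [initial_users * 2**n for n in range(doubling_steps + 1)]

# Linear view: the absolute increase per step keeps growing, so the curve seems to "blow up".
linear_increments = [users[n + 1] - users[n] for n in range(doubling_steps)]

# Logarithmic view: log10(users) rises by the same amount every step, which plots as a straight line.
log_values = [math.log10(u) for u in users]
log_increments = [log_values[n + 1] - log_values[n] for n in range(doubling_steps)]

print("first and last linear increments:", linear_increments[0], linear_increments[-1])
print("first and last log10 increments:", round(log_increments[0], 6), round(log_increments[-1], 6))
```

The linear increments grow by a factor of about half a million over the run, while every logarithmic increment is the same constant, \(\log_{10}2\).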

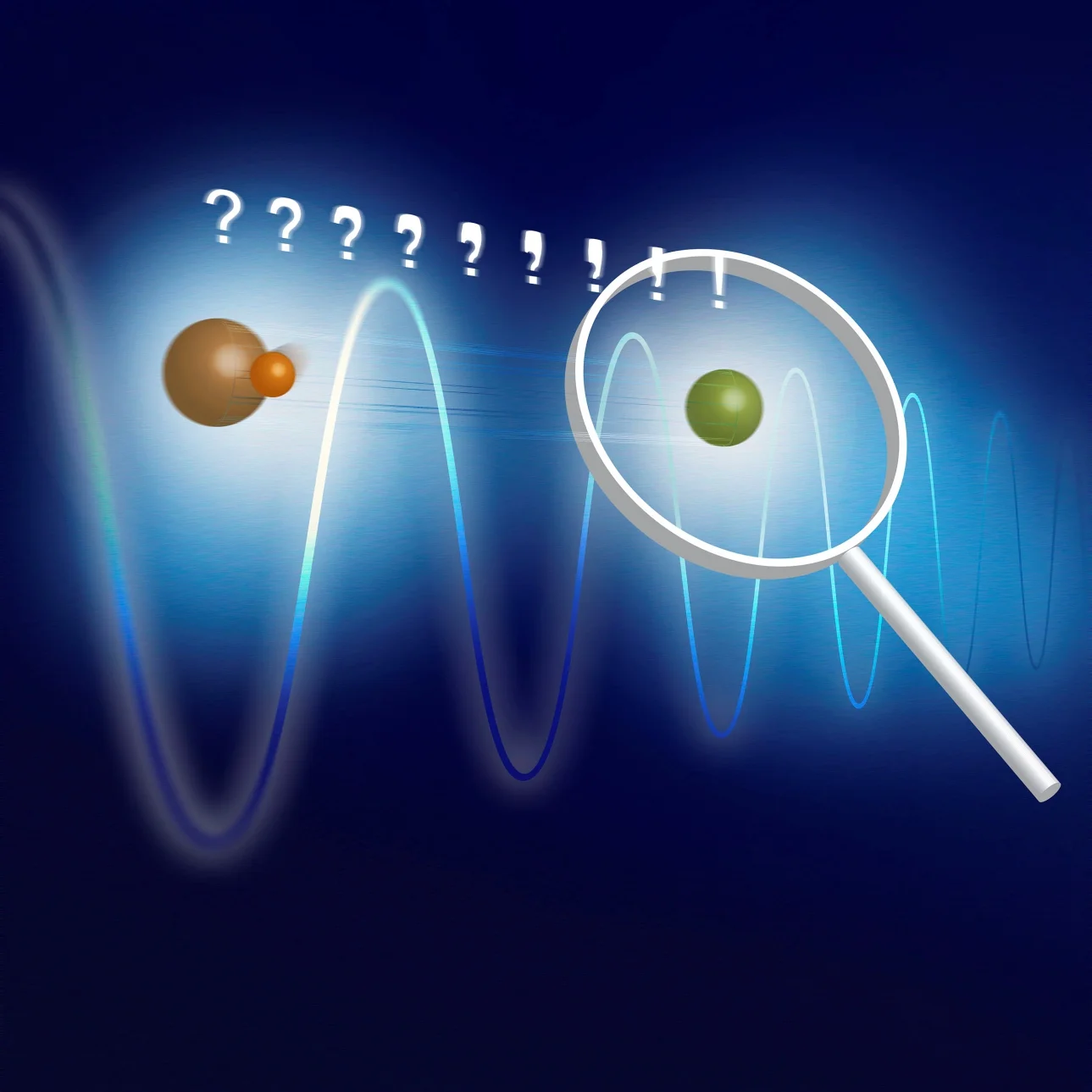

Calculating the Wavefunction Associated with any Ket Vector

In this lesson, we'll derive an equation which will allow us to calculate the wavefunction (which is to say, the collection of probability amplitudes) associated with any ket vector \(|\psi⟩\). Knowing the wavefunction is very important since we use probability amplitudes to calculate the probability of measuring eigenvalues (i.e. the position or momentum of a quantum system).

Schrodinger's Time-Dependent Equation: Time-Evolution of State Vectors

Newton's second law describes how the classical state {\(\vec{p_i}, \vec{R_i}\)} of a classical system changes with time based on the initial position and configuration \(\vec{R_i}\), and also the initial momentum \(\vec{p_i}\). We'll see that Schrodinger's equation is the quantum analogue of Newton's second law and describes the time-evolution of a quantum state \(|\psi(t)⟩\) based on the following two initial conditions: the energy and initial state of the system.

Overview of Single-Variable Calculus

In this lesson, we'll give a broad overview and description of single-variable calculus. Single-variable calculus is a big tool kit for finding the slope or area underneath any arbitrary function \(f(x)\) which is smooth and continuous. If the slope of \(f(x)\) is constant, then we don't need calculus to find the slope or area; but when the slope of \(f(x)\) is a variable, then we must use a tool called a derivative to find the slope and another tool called an integral to find the area.
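As a rough numerical sketch of the two tools, the snippet below approximates a slope with a central difference and an area with the midpoint rule. The helper names `slope` and `area`, the test function \(f(x)=x^2\), and the step sizes are assumptions made for illustration.

```python
def slope(f, x, h=1e-6):
    """Approximate the derivative f'(x) with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)


def area(f, a, b, n=10_000):
    """Approximate the integral of f from a to b with the midpoint rule."""
    width = (b - a) / n
    return sum(f(a + (i + 0.5) * width) for i in range(n)) * width


def f(x):
    # Example function whose slope is not constant.
    return x**2


slope_at_3 = slope(f, 3.0)       # exact derivative is 2x = 6
area_0_to_3 = area(f, 0.0, 3.0)  # exact integral is x^3 / 3 = 9

print(slope_at_3, area_0_to_3)
```

Both approximations approach the exact values (6 and 9) as the step size shrinks, which is the limiting process that the derivative and the integral make precise.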

Periodic Wavefunctions have Quantized Eigenvalues of Momenta and Angular Momenta

The wavefunction \(\psi(L,t)\) is confined to a circle whenever it is nonzero only at positions \(L\) that lie along a circle. When the wavefunction \(\psi(L,t)\) associated with a particle has non-zero values only on points along a circle of radius \(r\), the eigenvalues \(p\) (of the momentum operator \(\hat{P}\)) are quantized: they come in discrete multiples of \(n\frac{ℏ}{r}\) where \(n=1,2,…\) Since the eigenvalues for angular momentum are \(L=pr=nℏ\), it follows that angular momentum is also quantized.
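A brief sketch of where this quantization comes from, assuming a momentum eigenfunction of the plane-wave form \(e^{ips/ℏ}\), where \(s\) (a symbol introduced here for illustration) is the arc length measured along the circle. Going once around the circle returns to the same point, so the wavefunction must return to the same value:

$$e^{ip(s+2\pi r)/ℏ}=e^{ips/ℏ}\quad\Rightarrow\quad e^{2\pi i\,pr/ℏ}=1\quad\Rightarrow\quad \frac{pr}{ℏ}=n\quad\Rightarrow\quad p=n\frac{ℏ}{r},$$

for integer \(n\). Multiplying by \(r\) then gives \(pr=nℏ\) for the angular momentum.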

The Eigenvectors of any Hermitian Operator must be Orthogonal

In this lesson, we'll mathematically prove that for any Hermitian operator (and, hence, any observable), one can always find a complete basis of orthonormal eigenvectors.

Origin of Structure and "Clumpyness"

This video was produced by David Butler.\(^{[1]}\) For the transcript of this video, visit: https://www.youtube.com/watch?v=JpNaIzw_CZk

Fundamental questions which have been asked since the beginning of humanity are: How did structure arise in the cosmos? Why is there something rather than nothing? Why is there “clumpyness” in the cosmos (in other words, why is there more stuff here than there)? For a long time, answering such questions would have been impossible. But in the past few decades cosmologists, by using the scientific method and advanced technology, have unraveled these deep and profound mysteries which humans have pondered since their origin. Let’s start out by answering the second question: Why is there something rather than nothing? The fundamental principles of quantum mechanics predict a myriad of mathematical and physical consequences. One of them is the time-energy uncertainty principle, which predicts that over any given time interval, the observed energy of a particle is always uncertain and will be within a range of values because you must give it a “kick” to observe it. The energy of something can never be exactly zero (because then \(ΔE=0\) and the time-energy uncertainty principle would be violated). Therefore, not even the vacuum of empty space can have no energy. This energy of empty space can be transformed into mass (a property of matter) according to Einstein’s mass-energy equivalence principle and the rules of particle physics. Since the possible energy values of any region of space are random within a certain range of values, it follows that the distribution of energy, mass, and matter also must be random throughout space. But if the fundamental principles of quantum mechanics and the time-energy uncertainty principle in particular explain the origin of matter and energy in the universe, then how did the cosmos attain structure and clumpyness?
Over the next few paragraphs, we’ll begin a discussion which starts with analyzing the predicament (namely, applying quantum mechanics alone predicts that the universe should have been too uniform for galaxies and other structures to arise); then, after that, I’ll take you through a very brief account of how the universe developed structure and clumpyness.

In the earliest epochs of our universe, all of the matter and energy existed in the form of virtual particles popping in and out of existence. If the distribution of this matter was random then, on average, there would be just as many virtual particles over here as over there. But in order for structure to arise in the cosmos, the distribution of matter must start out clumpy, not almost completely uniform as the uncertainty principle predicts. This seems to contradict today’s observations, since if the matter density is initially uniform, it’ll more or less stay uniform.

The Big Bang theory is widely regarded as one of the greatest triumphs in science of the 20th century. It successfully predicted an enormous range of things: the cosmic microwave background radiation (CMBR); where the light elements came from as well as the correct amounts and proportion of each of them; and the observed redshifts and recessional velocities of distant galaxies. But there were many things which the Big Bang theory could not account for, such as how the universe got to its present size, how the recorded temperatures of the CMBR are so uniform, and how the CMBR has slight fluctuations to one part in \(10^5\). Cosmologists generally agree that we must postulate the existence of a physical mechanism which correctly predicts all of these observed features. This is precisely what inflationary theory accomplishes. Many have criticized the physical mechanisms postulated by inflationary theory as ad hoc, despite the theory’s success in predicting what has been observed experimentally.

The universe is presently 13.8 billion years old and we are causally connected with all of the matter and energy within a sphere whose radius is 13.8 billion light years. When the universe was only one second old, its temperature was \(10^{10}K\); the photons buzzing around back then were 3.7 billion times hotter than their present temperature of \(2.725K\). By Boltzmann’s relationship \(E∝T\), Planck’s relationship \(E∝λ^{-1}\), and the relationship between wavelength and the scale factor (\(λ∝a\)), it follows that \(T∝a^{-1}\), so the entire observable universe must have been 3.7 billion times smaller than it is today, or only about 3.7 light years in radius. Back in that distant epoch, since the universe was only one second old, photons emitted by charged particles could, at most, have travelled a distance of one light second. The gravitational field generated by energetic particles propagates at the speed of light and, therefore, would have had enough time to spread out a distance of only one light second. Any signal emitted by an energetic particle therefore could not travel farther than one light second and could not communicate with other energetic particles more than one light second away. Therefore, any pair of energetic particles separated by more than one light second from one another were causally disconnected.
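The arithmetic in this paragraph can be checked with a short sketch. The temperatures and the 13.8 billion light year radius are the values quoted above; the variable names and the 365.25-day year used to convert a light second into light years are assumptions made for illustration.

```python
# Values quoted in the text above.
temperature_at_one_second_K = 1e10
temperature_today_K = 2.725
radius_today_ly = 13.8e9  # the text's causally connected radius, in light years

# Temperature scales inversely with the scale factor, so the temperature ratio
# equals the factor by which lengths have stretched since then.
scale_ratio = temperature_at_one_second_K / temperature_today_K

radius_at_one_second_ly = radius_today_ly / scale_ratio

# One light second expressed in light years (assumes a 365.25-day year).
seconds_per_year = 365.25 * 24 * 3600
causal_reach_ly = 1.0 / seconds_per_year

print(f"stretch factor: {scale_ratio:.2e}")
print(f"radius at one second: {radius_at_one_second_ly:.2f} light years")
print(f"radius / causal reach: {radius_at_one_second_ly / causal_reach_ly:.2e}")
```

Under these assumptions the region was on the order of a hundred million times wider than the distance any signal could have crossed, which is the sense in which its parts were causally disconnected.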

The causal disconnection between matter and energy in a region of the universe 3.7 light years in radius when it was only one second old is significant because it implies that the energy density of regions separated by more than one light-second should be radically different. Therefore, the CMBR should not be so uniform. However, this causal disconnection does explain the origin of the observed fluctuations in matter and energy density: all of the particles within very small regions move towards one another due to gravity without affecting distant regions. This is how the first fluctuation in matter density originated. The clumpyness of matter was initially very slight; but over enormous time intervals (billions of years), gravity amplified this clumpyness to form the cosmic web, filaments, galaxy superclusters and clusters and groups, stars, planets, moons, comets, asteroids, and all of the large-scale structure and clumpyness we see in the universe today. On a medium size scale, the force of electromagnetism pulls and subsequently binds together atoms and molecules to form complex chemistry, the basis of all living organisms. This is the origin of structure and clumpyness on a medium size scale. The strong nuclear force binds together protons and neutrons to keep atomic nuclei held together and form structure and clumpyness on an even smaller scale. The slight non-uniformities in matter density imparted by quantum fluctuations eons ago and three fundamental forces (gravity, electromagnetism, and the strong force), together, seeded and eventually developed all of the structure and clumpyness in the cosmos we see today.

This article is licensed under a CC BY-NC-SA 4.0 license.

References

1. David Butler. "Classroom Aid - Cosmic Inflation". Online video clip. YouTube. YouTube, 11 September 2017. Web. 11 November 2017.

2. Gott, Richard. The Cosmic Web: Mysterious Architecture of the Universe. Princeton University Press, 2016.

3. Goldsmith, Donald; Tyson, Neil. Origins: Fourteen Billion Years of Cosmic Evolution. Blackstone Audio, Inc., 2014.

How initial states of definite energy change with time

Let’s ask the question: do wavefunctions of the particular form \(\psi(x,t)=F(t)G(x)\) satisfy Schrödinger’s time-dependent equation? We can answer this question by substituting \(\psi(x,t)\) into Schrödinger’s equation and checking whether it is satisfied. Schrödinger’s time-dependent equation can be viewed as a machine: if you give it the initial wavefunction \(\psi(x,0)\) as input, it will crank out \(\psi(x,t)\) and tell you how that initial wavefunction evolves with time. We are asking: if some wavefunction starts out as \(\psi(x,0)=F(0)G(x)\), is \(\psi(x,t)=F(t)G(x)\) a valid solution to Schrödinger’s equation? We know that \(\psi(x,0)=F(0)G(x)\) is a valid starting wavefunction, since \(\psi(x,0)=F(0)G(x)=(constant)G(x)\) is just a constant times some function of \(x\). But does \(\psi(x,t)=F(t)G(x)\) satisfy Schrödinger’s equation? Let’s substitute it into Schrödinger’s equation and find out:

$$iℏ\frac{∂}{∂t}(F(t)G(x))=\frac{-ℏ^2}{2m}\frac{∂^2}{∂x^2}(F(t)G(x))+V(x)F(t)G(x).\tag{1}$$

This can be simplified to

$$iℏG(x)\frac{d}{dt}(F(t))=F(t)(\frac{-ℏ^2}{2m}\frac{d^2}{dx^2}G(x)+V(x)G(x)).\tag{2}$$

The term \(\frac{-ℏ^2}{2m}\frac{d^2}{dx^2}G(x)+V(x)G(x)\) is just the energy operator \(\hat{E}\) acting on \(G(x)\); thus we can rewrite Equation (2) as

$$iℏG(x)\frac{d}{dt}F(t)=F(t)\hat{E}(G(x)).\tag{3}$$

Let’s divide both sides of Equation (3) by \(G(x)F(t)\) to get

$$\frac{iℏ}{F(t)}\frac{d}{dt}F(t)=\frac{\hat{E}(G(x))}{G(x)}.\tag{4}$$

Since the left-hand side of Equation (4) depends only on time and the right-hand side depends only on position, the two sides can be equal for all \(x\) and \(t\) only if both are equal to the same constant. It follows that

$$\frac{iℏ}{F(t)}\frac{d}{dt}F(t)=\frac{\hat{E}(G(x))}{G(x)}=C\tag{5}$$

where \(C\) is a constant. From Equation (5), we see that

$$\hat{E}(G(x))=CG(x).\tag{6}$$

In other words, \(G(x)\) must be an eigenfunction of the energy operator \(\hat{E}\) with eigenvalue \(C=E\). Thus, we can rewrite Equation (6) as

$$\hat{E}(\psi_E(x))=E\psi_E(x).\tag{7}$$

What this means is that the initial wavefunction \(\psi(x,0)=F(0)G(x)=(constant)\psi_E(x)\) must be an energy eigenfunction to satisfy Schrödinger’s equation. (Note that a constant times a wavefunction corresponds to that same wavefunction.) We also see from Equation (5) that

$$\frac{d}{dt}(F(t))=\frac{E}{iℏ}F(t).\tag{8}$$

The solution to Equation (8) is

$$F(t)=Ae^{Et/iℏ}.\tag{9}$$

It is easy to verify this by plugging Equation (9) into Equation (8). \(\psi(x,t)=F(t)G(x)\) is indeed a valid solution to Schrödinger’s time-dependent equation if \(G(x)=\psi_E(x)\) and \(F(t)=Ae^{Et/iℏ}\). If a system starts out in an energy eigenstate, then the wavefunction will change with time according to the equation

$$\psi(x,t)=Ae^{Et/iℏ}\psi_E(x).\tag{10}$$
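The check that Equation (9) solves Equation (8) can be written out explicitly. Differentiating Equation (9) with respect to time gives

$$\frac{d}{dt}\left(Ae^{Et/iℏ}\right)=\frac{E}{iℏ}Ae^{Et/iℏ}=\frac{E}{iℏ}F(t),$$

which is the right-hand side of Equation (8). Since \(1/i=-i\), the same solution can equivalently be written as \(F(t)=Ae^{-iEt/ℏ}\), a pure phase factor of constant modulus \(|A|\).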

Schrödinger’s equation describes how a wavefunction evolves with time while the system is left “undisturbed,” that is, while no measurement is made on it. If we measure the energy \(E\) of a system and collapse its wavefunction to the definite energy eigenstate \(\psi_E(x)\), and then simply “leave the system alone” for a time \(t\) without disturbing it, the wavefunction \(\psi_E(x)\) will change to \(Ae^{Et/iℏ}\psi_E(x)\). But multiplying \(\psi_E\) by \(Ae^{Et/iℏ}\) does not change the expectation value \(⟨\hat{L}⟩\) of any observable \(\hat{L}\), because the factor \(e^{Et/iℏ}\) has unit modulus and a constant multiple of a wavefunction represents the same state. Also, if \(|\phi⟩\) is any arbitrary state, then, for a normalized state with \(|A|=1\), \(|⟨\phi|\psi_E⟩|^2\) is the same as \(|⟨\phi|Ae^{Et/iℏ}\psi_E⟩|^2\). Thus the probability of measuring any physical quantity associated with the system does not change with time. For an atom in its ground state with a definite energy \(E_0\) which is not disturbed, \(⟨\hat{L}⟩\) will not change with time. You are equally likely to measure any particular position, momentum, angular momentum, and so on at any time \(t\).
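The claim that the phase factor leaves all measurement probabilities unchanged can be checked numerically. The sketch below is a toy model under stated assumptions: natural units with \(ℏ=1\), a hypothetical energy value, \(A=1\), and two-component complex vectors standing in for \(\psi_E\) and \(|\phi⟩\). The helper names `inner` and `evolve` are introduced here for illustration.

```python
import cmath

hbar = 1.0    # natural units (assumption for illustration)
energy = 2.5  # hypothetical energy eigenvalue

# Hypothetical discretized states: small normalized complex vectors.
psi_E = [0.6 + 0.0j, 0.0 + 0.8j]
phi = [1 / 2**0.5 + 0.0j, 1 / 2**0.5 + 0.0j]


def inner(a, b):
    """Discrete inner product <a|b>."""
    return sum(x.conjugate() * y for x, y in zip(a, b))


def evolve(state, t):
    """Multiply by the phase factor e^{Et/(i hbar)} from Equation (10), with A = 1."""
    phase = cmath.exp(energy * t / (1j * hbar))
    return [phase * c for c in state]


for t in (0.0, 0.7, 3.0):
    psi_t = evolve(psi_E, t)
    density = [abs(c) ** 2 for c in psi_t]   # |psi|^2 at each component
    overlap = abs(inner(phi, psi_t)) ** 2    # |<phi|psi(t)>|^2
    print(t, [round(d, 6) for d in density], round(overlap, 6))
```

At every time the probability density and the overlap probability come out the same, because the phase factor cancels whenever a modulus squared is taken.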

CRISPR-CAS9 Gene Editing

This article is a technical discussion of how the CRISPR/CAS9 system can be used to modify the genome of a mouse.

P-Series Convergence and Divergence

In this article, we discuss various ways to test whether a p-series converges or diverges.

Derivation of Snell's Law

The law of reflection had been well known as early as the first century, but it took more than another millennium to discover Snell's law, the law of refraction. The law of reflection was readily observable and could be easily determined by making measurements; this law states that if a light ray strikes a surface at an angle \(θ_i\) relative to the normal and gets reflected off of the surface, it will be reflected at an angle \(θ_r\) relative to the normal such that \(θ_i=θ_r\). The law of refraction, however, is a little less obvious and it required calculus to prove. The mathematician Pierre de Fermat postulated the principle of least time: that light travels along the path which gets it from one place to another such that the time \(t\) required to traverse that path is shorter than the time required to take any other path. In this lesson, we shall use this principle to derive Snell's law.
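Before the calculus, the principle of least time can be tried out numerically. The sketch below is not the derivation itself: it uses a ternary search in place of setting a derivative to zero, and the refractive indices `n1`, `n2` and the geometry `a`, `b`, `d` are hypothetical values chosen for illustration. Light starts a height `a` above a flat interface, ends a depth `b` below it at horizontal distance `d`, and crosses the interface at position `x`.

```python
import math

n1, n2 = 1.0, 1.5        # hypothetical refractive indices (roughly air and glass)
a, b, d = 1.0, 1.0, 2.0  # hypothetical heights above/below the interface and horizontal separation


def travel_time(x):
    """Travel time multiplied by c when the ray crosses the interface at x."""
    return n1 * math.hypot(x, a) + n2 * math.hypot(d - x, b)


# travel_time is convex in x, so a ternary search on [0, d] homes in on its minimum.
lo, hi = 0.0, d
for _ in range(200):
    m1 = lo + (hi - lo) / 3
    m2 = hi - (hi - lo) / 3
    if travel_time(m1) < travel_time(m2):
        hi = m2
    else:
        lo = m1
x_min = (lo + hi) / 2

# Sines of the angles measured from the normal on each side of the interface.
sin_theta_1 = x_min / math.hypot(x_min, a)
sin_theta_2 = (d - x_min) / math.hypot(d - x_min, b)

print(n1 * sin_theta_1, n2 * sin_theta_2)
```

The two printed numbers agree to within the precision of the search, which is the content of Snell's law, \(n_1\sin θ_1=n_2\sin θ_2\), for this particular geometry.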